Abstract:

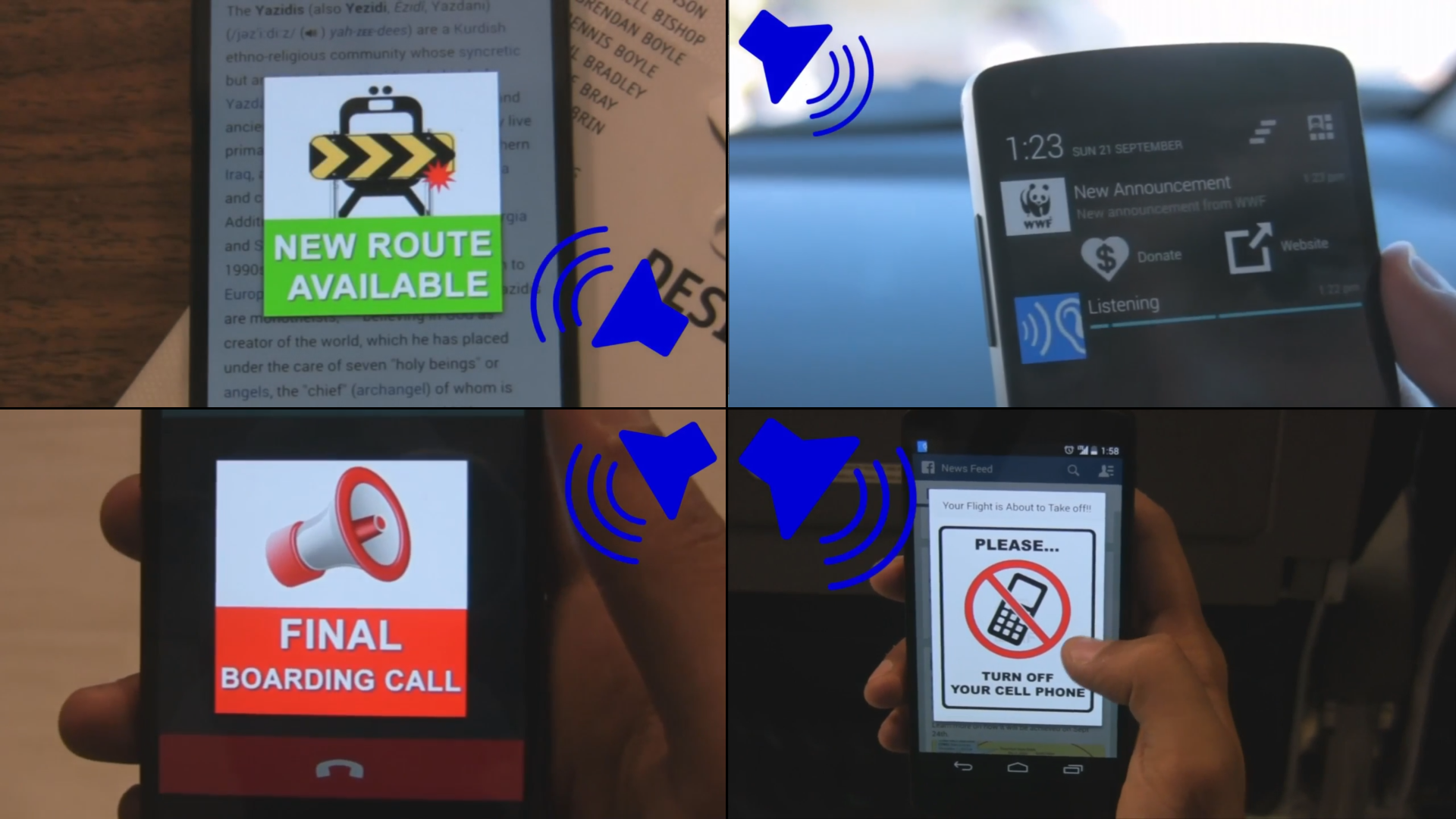

We present PhoneEar, a new approach that enables mobile devices to understand the broadcast audio and sounds that we hear every day using existing infrastructure. PhoneEar audio streams are embedded with sound-encoded data using nearly inaudible high frequencies. Mobile devices then listen for messages in the sounds around us, taking actions to ensure we don't miss any important information. In this paper, we detail our implementation of PhoneEar, describe a study demonstrating that mobile devices can effectively receive sound-based data, and describe the results of a user study showing that embedding data in sounds is not detrimental to sound quality. We also illustrate the space of new interactions through four PhoneEar-enabled applications. Finally, we discuss the challenges of deploying apps that can hear and react to data in the sounds around us.
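The abstract does not specify how PhoneEar modulates its data, so the following is only an illustrative sketch of the general idea of carrying bits on near-inaudible tones. It assumes simple binary frequency-shift keying; the tone frequencies (18 kHz and 19 kHz), the 10 ms bit duration, the sample rate, and all function names are hypothetical choices for this example, not details taken from PhoneEar.

```python
import math

# All constants below are assumptions for illustration, not PhoneEar's parameters.
SAMPLE_RATE = 44100      # samples per second
FREQ_ZERO = 18000.0      # Hz, hypothetical tone representing bit 0
FREQ_ONE = 19000.0       # Hz, hypothetical tone representing bit 1
SAMPLES_PER_BIT = 441    # 10 ms per bit at 44.1 kHz
AMPLITUDE = 0.1          # kept low so the tones stay unobtrusive


def encode_bits(bits):
    """Return audio samples with one high-frequency tone burst per bit."""
    samples = []
    for bit in bits:
        freq = FREQ_ONE if bit else FREQ_ZERO
        for n in range(SAMPLES_PER_BIT):
            samples.append(AMPLITUDE * math.sin(2 * math.pi * freq * n / SAMPLE_RATE))
    return samples


def goertzel_power(block, freq):
    """Estimate the power of a single frequency in a block (Goertzel algorithm)."""
    coeff = 2 * math.cos(2 * math.pi * freq / SAMPLE_RATE)
    s_prev, s_prev2 = 0.0, 0.0
    for x in block:
        s = x + coeff * s_prev - s_prev2
        s_prev2, s_prev = s_prev, s
    return s_prev ** 2 + s_prev2 ** 2 - coeff * s_prev * s_prev2


def decode_bits(samples):
    """Recover bits by comparing tone power at the two frequencies per block."""
    bits = []
    for start in range(0, len(samples) - SAMPLES_PER_BIT + 1, SAMPLES_PER_BIT):
        block = samples[start:start + SAMPLES_PER_BIT]
        one = goertzel_power(block, FREQ_ONE)
        zero = goertzel_power(block, FREQ_ZERO)
        bits.append(1 if one > zero else 0)
    return bits


# Round trip: the decoded bits should match the encoded message.
message = [1, 0, 1, 1, 0, 0, 1, 0]
assert decode_bits(encode_bits(message)) == message
```

In a real deployment the tone bursts would be mixed into ordinary audible content and the receiver would also need synchronization and error handling, none of which this sketch attempts.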

Researchers: Aditya Shekhar Nittala, Xing-Dong Yang, Scott Bateman, Ehud Sharlin, Saul Greenberg
